Learn about Oracle NoSQL Database Migrator and how to use it for data migration.

Oracle NoSQL Database Migrator is a tool that enables you to migrate Oracle NoSQL tables from one data source to another. This tool can operate on tables in Oracle NoSQL Database Cloud Service , Oracle NoSQL Database on-premises and AWS S3. The Migrator tool supports several different data formats and physical media types. Supported data formats are JSON, Parquet, MongoDB-formatted JSON, DynamoDB-formatted JSON, and CSV files. Supported physical media types are files, OCI Object Storage, Oracle NoSQL Database on-premises, Oracle NoSQL Database Cloud Service and AWS S3.

This article has the following topics:

Related Topics

Oracle NoSQL Database Migrator lets you move Oracle NoSQL tables from one data source to another, such as Oracle NoSQL Database on-premises or cloud or even a simple JSON file.

There can be many situations that require you to migrate NoSQL tables from or to an Oracle NoSQL Database . For instance, a team of developers enhancing a NoSQL Database application may want to test their updated code in the local Oracle NoSQL Database Cloud Service (NDCS) instance using cloudsim. To verify all the possible test cases, they must set up the test data similar to the actual data. To do this, they must copy the NoSQL tables from the production environment to their local NDCS instance, the cloudsim environment. In another situation, NoSQL developers may need to move their application data from on-premise to the cloud and vice-versa, either for development or testing.

In all such cases and many more, you can use Oracle NoSQL Database Migrator to move your NoSQL tables from one data source to another, such as Oracle NoSQL Database on-premise or cloud or even a simple JSON file. You can also copy NoSQL tables from a MongoDB-formatted JSON input file, DynamoDB-formatted JSON input file (either stored in AWS S3 source or from files), or a CSV file into your NoSQL Database on-premises or cloud.

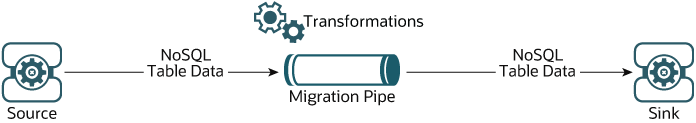

As depicted in the following figure, the NoSQL Database Migrator utility acts as a connector or pipe between the data source and the target (referred to as the sink). In essence, this utility exports data from the selected source and imports that data into the sink. This tool is table-oriented, that is, you can move the data only at the table level. A single migration task operates on a single table and supports migration of table data from source to sink in various data formats.

Oracle NoSQL Database Migrator is designed such that it can support additional sources and sinks in the future. For a list of sources and sinks supported by Oracle NoSQL Database Migrator as of the current release, see Supported Sources and Sinks.

Description of the illustration migrator_overview.eps

Learn about the different terms used in the above diagram, in detail.

The NoSQL Database Migrator tool supports different types of sources and sinks (that is physical media or repositories of data) and data formats (that is how the data is represented in the source or sink). Supported data formats are JSON, Parquet, MongoDB-formatted JSON, DynamoDB-formatted JSON, and CSV files. Supported source and sink types are files, OCI Object Storage, Oracle NoSQL Database on-premise, and Oracle NoSQL Database Cloud Service .

< "source": < "type" : source type>, //source-configuration for type. See Source Configuration Templates . >, "sink": < "type" : sink type>, //sink-configuration for type. See Sink Configuration Templates . >, "transforms" : < //transforms configuration. See Transformation Configuration Templates . >, "migratorVersion" : "migrator version>", "abortOnError" : > | Group | Parameters | Mandatory (Y/N) | Purpose | Supported Values |

|---|---|---|---|---|

| source | type | Y | Represents the source from which to migrate the data. The source provides data and metadata (if any) for migration. | To know the type value for each source, see Supported Sources and Sinks. |

| source | source-configuration for type | Y | Defines the configuration for the source. These configuration parameters are specific to the type of source selected above. | See Source Configuration Templates . for the complete list of configuration parameters for each source type. |

| sink | type | Y | Represents the sink to which to migrate the data. The sink is the target or destination for the migration. | To know the type value for each source, see Supported Sources and Sinks. |

| sink | sink-configuration for type | Y | Defines the configuration for the sink. These configuration parameters are specific to the type of sink selected above. | See Sink Configuration Templates for the complete list of configuration parameters for each sink type. |

| transforms | transforms configuration | N | Defines the transformations to be applied to the data in the migration pipe. | See Transformation Configuration Templates for the complete list of transformations supported by the NoSQL Data Migrator. |

| - | migratorVersion | N | Version of the NoSQL Data Migrator | - |

| - | abortOnError | N | Specifies whether to stop the migration activity in case of any error or not. The default value is true indicating that the migration stops whenever it encounters a migration error. If you set this value to false , the migration continues even in case of failed records or other migration errors. The failed records and migration errors will be logged as WARNINGs on the CLI terminal. | true, false |

Note: As JSON file is case-sensitive, all the parameters defined in the configuration file are case-sensitive unless specified otherwise.

This topic provides the list of the sources and sinks supported by the Oracle NoSQL Database Migrator .

You can use any combination of a valid source and sink from this table for the migration activity. However, you must ensure that at least one of the ends, that is, source or sink must be an Oracle NoSQL product. You can not use the NoSQL Database Migrator to move the NoSQL table data from one file to another.

Oracle NoSQL Database

( nosqldb )

Oracle NoSQL Database Cloud Service

( nosqldb_cloud )

File system

( file )

MongoDB JSON

( mongodb_json )

DynamoDB JSON

( dynamodb_json )

OCI Object Storage

( object_storage_oci )

MongoDB JSON

( mongodb_json )

DynamoDB JSON

( dynamodb_json )

Many configuration parameters are common across the source and sink configuration. For ease of reference, the description for such parameters is repeated for each source and sink in the documentation sections, which explain configuration file formats for various types of sources and sinks. In all the cases, the syntax and semantics of the parameters with the same name are identical.

Some of the source and sink types have optional or mandatory security information for authentication purposes.

All sources and sinks that use services in the Oracle Cloud Infrastructure (OCI) can use certain parameters for providing optional security information. This information can be provided using an OCI configuration file or Instance Principal.

Oracle NoSQL Database sources and sinks require mandatory security information if the installation is secure and uses an Oracle Wallet-based authentication. This information can be provided by adding a jar file to the < MIGRATOR_HOME >/lib directory.

If an Oracle NoSQL Database installation uses Oracle Wallet-based authentication, you need an additional jar file that is part of the EE installation. For more information, see Oracle Wallet.

Without this jar file, you will get the following error message:

Could not find kvstore-ee.jar in lib directory. Copy kvstore-ee.jar to lib directory.

To prevent the exception shown above, you must copy the kvstore-ee.jar file from your EE server package to the < MIGRATOR_HOME >/lib directory. < MIGRATOR_HOME >is the nosql-migrator-M.N.O/ directory created by extracting the Oracle NoSQL Database Migrator package and M.N.O represent the software release.major.minor numbers. For example, nosql-migrator-1.1.0/lib .

The wallet-based authentication is supported ONLY in the Enterprise Edition (EE) of Oracle NoSQL Database .

Authenticating with Instance Principals

Instance principals is an IAM service feature that enables instances to be authorized actors (or principals) that can perform actions on service resources. Each compute instance has its own identity, and it authenticates using the certificates added to it.

Oracle NoSQL Database Migrator provides an option to connect to a NoSQL cloud and OCI Object Storage sources and sinks using instance principal authentication. It is only supported when the NoSQL Database Migrator tool is used within an OCI compute instance, for example, the NoSQL Database Migrator tool running in a VM hosted on OCI. To enable this feature use the useInstancePrincipal attribute of the NoSQL cloud source and sink configuration file. For more information on configuration parameters for different types of sources and sinks, see Source Configuration Templates and Sink Configuration Templates .

For more information on instance principals, see Calling Services from an Instance.

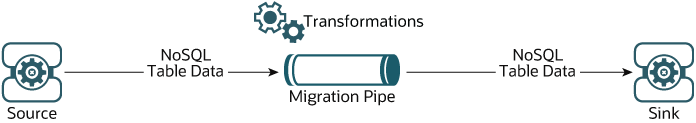

Learn about the various steps involved in using the Oracle NoSQL Database Migrator utility for migrating your NoSQL data.

The high level flow of tasks involved in using NoSQL Database Migrator is depicted in the below figure.

Description of the illustration migrator_flow.eps

Download the NoSQL Data Migrator Utility

The Oracle NoSQL Database Migrator utility is available for download from the Oracle NoSQL Downloads page. Once you download and unzip it on your machine, you can access the runMigrator command from the command line interface.

Oracle NoSQL Database Migrator utility requires Java 11 or higher versions to run.

Identify the Source and Sink

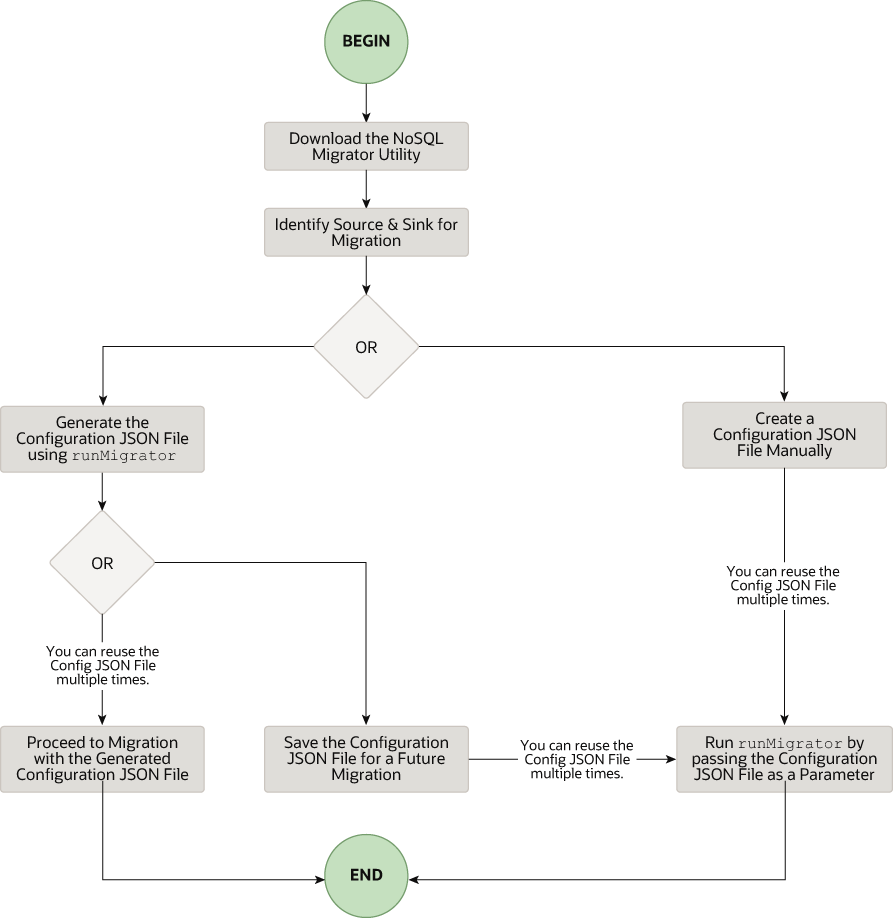

CREATE TABLE IF NOT EXISTS tablename>(ID STRING, DOCUMENT JSON,PRIMARY KEY(SHARD(ID))Where: — tablename = value provided for the table attribute in the configuration. — value from each document of the mongoDB exported JSON source file. — DOCUMENT = For each document in the mongoDB exported file, the contents excluding the _id field are aggregated into the DOCUMENT column.

If the source is a DynamoDB-formatted JSON file, the default schema for the table will be as follows:

CREATE TABLE IF NOT EXISTS (DDBPartitionKey_name DDBPartitionKey_type, [DDBSortKey_name DDBSortKey_type],DOCUMENT JSON, PRIMARY KEY(SHARD(DDBPartitionKey_name),[DDBSortKey_name]))Where: — TABLE_NAME = value provided for the sink table in the configuration — DDBPartitionKey_name = value provided for the partition key in the configuration — DDBPartitionKey_type = value provided for the data type of the partition key in the configuration — DDBSortKey_name = value provided for the sort key in the configuration if any — DDBSortKey_type = value provided for the data type of the sort key in the configuration if any — DOCUMENT = All attributes except the partition and sort key of a Dynamo DB table item aggregated into a NoSQL JSON column If the source format is a CSV file, a default schema is not supported for the target table. You can create a schema file with a table definition containing the same number of columns and data types as the source CSV file. For more details on the Schema file creation, see Providing Table Schema.

For all the other sources, the default schema will be as follows:CREATE TABLE IF NOT EXISTS tablename> (ID LONG GENERATED ALWAYS AS IDENTITY, DOCUMENT JSON, PRIMARY KEY(ID))Where: — tablename = value provided for the table attribute in the configuration. — auto-generated LONG value.

— DOCUMENT = The JSON record provided by the source is aggregated into the DOCUMENT column.Note: If the _id value is not provided as a string in the MongoDB-formatted JSON file, NoSQL Database Migrator converts it into a string before inserting it into the default schema.

Description of the illustration source_sink_schema_example.png

The table schema file, for example, mytable_schema.ddl can include table DDL statements. The NoSQL Database Migrator tool executes this table schema file before starting the migration. The migrator tool supports no more than one DDL statement per line in the schema file. For example,

CREATE TABLE IF NOT EXISTS(id INTEGER, name STRING, age INTEGER, PRIMARY KEY(SHARD(ID)))Migration will fail if the table is present at the sink and the DDL in the schemaPath is different than the table.

Note: If the source is a CSV file, create a file with the DDL commands for the schema of the target table. Provide the file path in schemaInfo.schemaPath parameter of the sink configuration file.

Migrating TTL Metadata for Table Rows

You can choose to include the TTL metadata for table rows along with the actual data when performing migration of NoSQL tables. The NoSQL Database Migrator provides a configuration parameter to support the export and import of table row TTL metadata. Additionally, the tool provides an option to select the relative expiry time for table rows during the import operation. You can optionally export or import TTL metadata using the includeTTL parameter.

The support for migrating TTL metadata for table rows is only available for Oracle NoSQL Database and Oracle NoSQL Database Cloud Service .

Exporting TTL metadata

When a table is exported, TTL data is exported for the table rows that have a valid expiration time. If a row does not expire, then it is not included explicitly in the exported data because its expiration value is always 0. TTL information is contained in the _metadata JSON object for each exported row. The NoSQL Database Migrator exports the expiration time for each row as the number of milliseconds since the UNIX epoch (Jan 1st, 1970). For example,

//Row 1 < "id" : 1, "name" : "xyz", "age" : 45, "_metadata" : < "expiration" : 1629709200000 //Row Expiration time in milliseconds >> //Row 2 < "id" : 2, "name" : "abc", "age" : 52, "_metadata" : < "expiration" : 1629709400000 //Row Expiration time in milliseconds >> //Row 3 No Metadata for below row as it will not expire

Importing TTL metadata

You can optionally import TTL metadata using a configuration parameter, includeTTL . The import operation handles the following use cases when migrating table rows containing TTL metadata. These use-cases are applicable only when the includeTTL configuration parameter is specified.

The reference time while importing is 1629707962582, which is Monday, August 23, 2021 8:39:22.582 AM.

Imported table rowImporting data to a sink with an IDENTITY column

You can import the data from a valid source to a sink table (On-premises/Cloud Services) with an IDENTITY column. You create the IDENTITY column as either GENERATED ALWAYS AS IDENTITY or GENERATED BY DEFAULT AS IDENTITY. For more information on table creation with an IDENTITY column, see Creating Tables With an IDENTITY Column in the SQL Reference Guide .

Before importing the data, make sure that the Oracle NoSQL Database table at the sink is empty if it exists. If there is pre-existing data in the sink table, migration can lead to issues such as overwriting existing data in the sink table or skipping source data during the import.

Sink table with IDENTITY column as GENERATED ALWAYS AS IDENTITY

Consider a sink table with the IDENTITY column created as GENERATED ALWAYS AS IDENTITY. The data import is dependent on whether or not the source supplies the values to the IDENTITY column and ignoreFields transformation parameter in the configuration file.

For example, you want to import data from a JSON file source to the Oracle NoSQL Database table as the sink. The schema of the sink table is:

CREATE TABLE IF NOT EXISTS migrateID(ID INTEGER GENERATED ALWAYS AS IDENTITY, name STRING, course STRING, PRIMARY KEY (ID))CASE 1: Source data does not supply a value for the IDENTITY field of the sink table.

Example: JSON source file sample_noID.json

Create/generate the configuration file.

Data migration is successful. IDENTITY column values are auto-generated. Migrated data in Oracle NoSQL Database sink table migrateID :

CASE 2: Source data supplies values for the IDENTITY field of the sink table.

Example: JSON source file sampleID.json

Create/generate the configuration file. You provide an ignoreFields transformation for the ID column in the sink configuration template.

Data migration is successful. The supplied ID values are skipped and the IDENTITY column values are auto-generated.

Migrated data in Oracle NoSQL Database sink table migrateID :You create/generate the configuration file without the ignoreFields transformation for the IDENTITY column.

Data migration fails with the following error message:

For more details on the transformation configuration parameters, see the topic Transformation Configuration Templates.

Sink table with IDENTITY column as GENERATED BY DEFAULT AS IDENTITY

Consider a sink table with the IDENTITY column created as GENERATED BY DEFAULT AS IDENTITY. The data import is dependent on whether or not the source supplies the values to the IDENTITY column and ignoreFields transformation parameter.

For example, you want to import data from a JSON file source to the Oracle NoSQL Database table as the sink. The schema of the sink table is:

CREATE TABLE IF NOT EXISTS migrateID(ID INTEGER GENERATED BY DEFAULT AS IDENTITY, name STRING, course STRING, PRIMARY KEY (ID))CASE 1: Source data does not supply a value for the IDENTITY field of the sink table.

Example: JSON source file sample_noID.json

Create/generate the configuration file.

Data migration is successful. IDENTITY column values are auto-generated. Migrated data in Oracle NoSQL Database sink table migrateID :

CASE 2: Source data supplies values for the IDENTITY field of the sink table and it is a Primary Key field.

Example: JSON source file sampleID.json

Create/generate the configuration file. You provide an ignoreFields transformation for the ID column in the sink configuration template (Recommended) .

Data migration is successful. The supplied ID values are skipped and the IDENTITY column values are auto-generated.

Migrated data in Oracle NoSQL Database sink table migrateID :You create/generate the configuration file without the ignoreFields transformation for the IDENTITY column.

Data migration is successful. The supplied ID values from the source are copied into the ID column in the sink table.

When you try to insert an additional row to the table without supplying an ID value, the sequence generator tries to auto-generate the ID value. The sequence generator's starting value is 1. As a result, the generated ID value can potentially duplicate one of the existing ID values in the sink table. Since this is a violation of the primary key constraint, an error is returned and the row does not get inserted.

See Sequence Generator for additional information.

To avoid the primary key constraint violation, the sequence generator must start the sequence with a value that does not conflict with existing ID values in the sink table. To use the START WITH attribute to make this modification, see the example below:

Example : Migrated data in Oracle NoSQL Database sink table migrateID :To find the appropriate value for the sequence generator to insert in the ID column, fetch the maximum value of the ID field using the following query:

SELECT max(ID) FROM migrateIDThe maximum value of the ID column in the sink table is 3. You want the sequence generator to start generating the ID values beyond 3 to avoid duplication. You update the sequence generator's START WITH attribute to 4 using the following statement:

ALTER Table migrateID (MODIFY ID GENERATED BY DEFAULT AS IDENTITY (START WITH 4))This will start the sequence at 4.

Now when you insert rows to the sink table without supplying the ID values, the sequence generator auto-generates the ID values from 4 onwards averting the duplication of the IDs.

For more details on the transformation configuration parameters, see the topic Transformation Configuration Templates.

Run the runMigrator command

The runMigrator executable file is available in the extracted NoSQL Database Migrator files. You must install Java 11 or higher version and bash on your system to successfully run the runMigrator command.

[~]$ ./runMigrator configuration file is not provided. Do you want to generate configuration? (y/n) [n]: y . . [~]$ ./runMigrator -c Logging Migrator Progress

NoSQL Database Migrator tool provides options, which enables trace, debugging, and progress messages to be printed to standard output or to a file. This option can be useful in tracking the progress of migration operation, particularly for very large tables or data sets.

$./runMigrator --log-level loglevel>$./runMigrator --log-level debug| Log Level | Description |

|---|---|

| warning | Prints errors and warnings. |

| info ( default ) | Prints the progress status of data migration such as validating source, validating sink, creating tables, and count of number of data records migrated. |

| debug | Prints additional debug information. |

| all | Prints everything. This level turns on all levels of logging. |

You can specify the name of the log file using --log-file or -f parameter. If --log-file is passed as run time parameter to the runMigrator command, the NoSQL Database Migrator writes all the log messages to the file else to the standard output.

$./runMigrator --log-file log file name>$./runMigrator --log-file nosql_migrator.logLearn how to perform data migration using the Oracle NoSQL Database Migrator for specific use cases. You can find detailed systematic instructions with code examples to perform migration in each of the use cases.

This article has the following topics:

This example shows how to use the Oracle NoSQL Database Migrator to copy data and the schema definition of a NoSQL table from Oracle NoSQL Database Cloud Service (NDCS) to a JSON file.

An organization decides to train a model using the Oracle NoSQL Database Cloud Service (NDCS) data to predict future behaviors and provide personalized recommendations. They can take a periodic copy of the NDCS tables' data to a JSON file and apply it to the analytic engine to analyze and train the model. Doing this helps them separate the analytical queries from the low-latency critical paths.

For the demonstration, let us look at how to migrate the data and schema definition of a NoSQL table called myTable from NDCS to a JSON file.

[DEFAULT] tenancy=ocid1.tenancy.oc1. user=ocid1.user.oc1. fingerprint= 43:d1. key_file= pass_phrase=

To migrate the data and schema definition of myTable from Oracle NoSQL Database Cloud Service to a JSON file:

[~/nosqlMigrator/nosql-migrator-1.0.0]$./runMigratorConfiguration file is not provided. Do you want to generate configuration? (y/n) [n]: y Generating a configuration file interactively. Enter a location for your config [./migrator-config.json]: /home//nosqlMigrator/NDCS2JSON Select the source: 1) nosqldb 2) nosqldb_cloud 3) file 4) object_storage_oci 5) aws_s3 #? 2 Configuration for source type=nosqldb_cloud Enter endpoint URL or region ID of the Oracle NoSQL Database Cloud: us-phoenix-1 Select the authentication type: 1) credentials_file 2) instance_principal 3) delegation_token #? 1 Enter path to the file containing OCI credentials [/home//.oci/config]: Enter the profile name in OCI credentials file [DEFAULT]: Enter the compartment name or id of the table []: developers Enter table name: myTable Include TTL data? If you select 'yes' TTL of rows will also be included in the exported data.(y/n) [n]: Enter percentage of table read units to be used for migration operation. (1-100) [90]: Enter store operation timeout in milliseconds. (1-30000) [5000]:Select the sink: 1) nosqldb 2) nosqldb_cloud 3) file #? 3 Configuration for sink type=file Enter path to a file to store JSON data: /home/apothula/nosqlMigrator/myTableJSON Would you like to store JSON in pretty format? (y/n) [n]: y Would you like to migrate the table schema also? (y/n) [y]: y Enter path to a file to store table schema: /home/apothula/nosqlMigrator/myTableSchemaWould you like to add transformations to source data? (y/n) [n]:Would you like to continue migration in case of any record/row is failed to migrate?: (y/n) [n]: generated configuration is: < "source": < "type": "nosqldb_cloud", "endpoint": "us-phoenix-1", "table": "myTable", "compartment": "developers", "credentials": "/home/apothula/.oci/config", "credentialsProfile": "DEFAULT", "readUnitsPercent": 90, "requestTimeoutMs": 5000 >, "sink": < "type": "file", "format": "json", "schemaPath": "/home/apothula/nosqlMigrator/myTableSchema", "pretty": true, "dataPath": "/home/apothula/nosqlMigrator/myTableJSON" >, "abortOnError": true, "migratorVersion": "1.0.0" >Note: If you select n , you can use the generated configuration file to run the migration using the ./runMigrator -c or the ./runMigrator --config option.

would you like to run the migration with above configuration? If you select no, you can use the generated configuration file to run the migration using ./runMigrator --config /home/apothula/nosqlMigrator/NDCS2JSON (y/n) [y]:Records provided by source=10,Records written to sink=10,Records failed=0. Elapsed time: 0min 1sec 277ms Migration completed.To validate the migration, you can open the JSON Sink files and view the schema and data.

-- Exported myTable Data [~/nosqlMigrator]$cat myTableJSON < "id" : 10, "document" : < "course" : "Computer Science", "name" : "Neena", "studentid" : 105 >> < "id" : 3, "document" : < "course" : "Computer Science", "name" : "John", "studentid" : 107 >> < "id" : 4, "document" : < "course" : "Computer Science", "name" : "Ruby", "studentid" : 100 >> < "id" : 6, "document" : < "course" : "Bio-Technology", "name" : "Rekha", "studentid" : 104 >> < "id" : 7, "document" : < "course" : "Computer Science", "name" : "Ruby", "studentid" : 100 >> < "id" : 5, "document" : < "course" : "Journalism", "name" : "Rani", "studentid" : 106 >> < "id" : 8, "document" : < "course" : "Computer Science", "name" : "Tom", "studentid" : 103 >> < "id" : 9, "document" : < "course" : "Computer Science", "name" : "Peter", "studentid" : 109 >> < "id" : 1, "document" : < "course" : "Journalism", "name" : "Tracy", "studentid" : 110 >> < "id" : 2, "document" : < "course" : "Bio-Technology", "name" : "Raja", "studentid" : 108 >>-- Exported myTable Schema [~/nosqlMigrator]$cat myTableSchema CREATE TABLE IF NOT EXISTS myTable (id INTEGER, document JSON, PRIMARY KEY(SHARD(id)))This example shows how to use the Oracle NoSQL Database Migrator to copy data and the schema definition of a NoSQL table from Oracle NoSQL Database to Oracle NoSQL Database Cloud Service (NDCS).

As a developer, you are exploring options to avoid the overhead of managing the resources, clusters, and garbage collection for your existing NoSQL Database KVStore workloads. As a solution, you decide to migrate your existing on-premise KVStore workloads to Oracle NoSQL Database Cloud Service because NDCS manages them automatically.

For the demonstration, let us look at how to migrate the data and schema definition of a NoSQL table called myTable from the NoSQL Database KVStore to NDCS. We will also use this use case to show how to run the runMigrator utility by passing a precreated configuration file.

[DEFAULT] tenancy=ocid1.tenancy.oc1. user=ocid1.user.oc1. fingerprint= 43:d1. key_file= pass_phrase=

< "source" : < "type" : "nosqldb", "storeName" : "kvstore", "helperHosts" : [":5000"], "table" : "myTable", "requestTimeoutMs" : 5000 >, "sink" : < "type" : "nosqldb_cloud", "endpoint" : "us-phoenix-1", "table" : "myTable", "compartment" : "developers", "schemaInfo" : < "schemaPath" : "", "readUnits" : 100, "writeUnits" : 100, "storageSize" : 1 >, "credentials" : "", "credentialsProfile" : "DEFAULT", "writeUnitsPercent" : 90, "requestTimeoutMs" : 5000 >, "abortOnError" : true, "migratorVersion" : "1.0.0" >[~/nosqlMigrator/nosql-migrator-1.0.0]$./runMigrator --config Records provided by source=10, Records written to sink=10, Records failed=0. Elapsed time: 0min 10sec 426ms Migration completed.To validate the migration, you can login to your NDCS console and verify that myTable is created with the source data.

This example shows the usage of Oracle NoSQL Database Migrator to copy data from a JSON file source to Oracle NoSQL Database Cloud Service .

After evaluating multiple options, an organization finalizes Oracle NoSQL Database Cloud Service as its NoSQL Database platform. As its source contents are in JSON file format, they are looking for a way to migrate them to Oracle NoSQL Database Cloud Service .

In this example, you will learn to migrate the data from a JSON file called SampleData.json . You run the runMigrator utility by passing a pre-created configuration file. If the configuration file is not provided as a run time parameter, the runMigrator utility prompts you to generate the configuration through an interactive procedure.

SampleData.json is the source file. It contains multiple JSON documents with one document per line, delimited by a new line character.

<"id":6,"val_json":<"array":["q","r","s"],"date":"2023-02-04T02:38:57.520Z","nestarray":[[1,2,3],[10,20,30]],"nested":<"arrayofobjects":[<"datefield":"2023-03-04T02:38:57.520Z","numfield":30,"strfield":"foo54">,],"nestNum":10,"nestString":"bar">,"num":1,"string":"foo">> <"id":3,"val_json":<"array":["g","h","i"],"date":"2023-02-02T02:38:57.520Z","nestarray":[[1,2,3],[10,20,30]],"nested":<"arrayofobjects":[<"datefield":"2023-02-02T02:38:57.520Z","numfield":28,"strfield":"foo3">,],"nestNum":10,"nestString":"bar">,"num":1,"string":"foo">> <"id":7,"val_json":<"array":["a","b","c"],"date":"2023-02-20T02:38:57.520Z","nestarray":[[1,2,3],[10,20,30]],"nested":<"arrayofobjects":[<"datefield":"2023-01-20T02:38:57.520Z","numfield":28,"strfield":"foo">,],"nestNum":10,"nestString":"bar">,"num":1,"string":"foo">> <"id":4,"val_json":<"array":["j","k","l"],"date":"2023-02-03T02:38:57.520Z","nestarray":[[1,2,3],[10,20,30]],"nested":<"arrayofobjects":[<"datefield":"2023-02-03T02:38:57.520Z","numfield":28,"strfield":"foo">,],"nestNum":10,"nestString":"bar">,"num":1,"string":"foo">>[DEFAULT] tenancy=ocid1.tenancy.oc1. user=ocid1.user.oc1. fingerprint= 43:d1. region=us-ashburn-1 key_file= pass_phrase=

create table Migrate_JSON (id INTEGER, val_json JSON, PRIMARY KEY(id));To migrate the JSON source file from SampleData.json to Oracle NoSQL Database Cloud Service , perform the following:

< "source" : < "type" : "file", "format" : "json", "schemaInfo" : < "schemaPath" : "[~/nosql-migrator-1.5.0]/schema_json.ddl" >, "dataPath" : "[~/nosql-migrator-1.5.0]/SampleData.json" >, "sink" : < "type" : "nosqldb_cloud", "endpoint" : "us-ashburn-1", "table" : "Migrate_JSON", "compartment" : "Training-NoSQL", "includeTTL" : false, "schemaInfo" : < "readUnits" : 100, "writeUnits" : 60, "storageSize" : 1, "useSourceSchema" : true >, "credentials" : "/home/user/.oci/config", "credentialsProfile" : "DEFAULT", "writeUnitsPercent" : 90, "overwrite" : true, "requestTimeoutMs" : 5000 >, "abortOnError" : true, "migratorVersion" : "1.5.0" >[~/nosql-migrator-1.5.0]$./runMigrator --config creating source from given configuration: source creation completed creating sink from given configuration: sink creation completed creating migrator pipeline migration started [cloud sink] : start loading DDLs [cloud sink] : executing DDL: create table Migrate_JSON (id INTEGER, val_json JSON, PRIMARY KEY(id)),limits: [100, 60, 1] [cloud sink] : completed loading DDLs [cloud sink] : start loading records [json file source] : start parsing JSON records from file: SampleData.json [INFO] migration completed. Records provided by source=4, Records written to sink=4, Records failed=0, Records skipped=0. Elapsed time: 0min 5sec 778ms Migration completed.To validate the migration, you can log in to your Oracle NoSQL Database Cloud Service console and verify that the Migrate_JSON table is created with the source data. For the procedure to access the console, see Accessing the Service from the Infrastructure Console article in the Oracle NoSQL Database Cloud Service document.

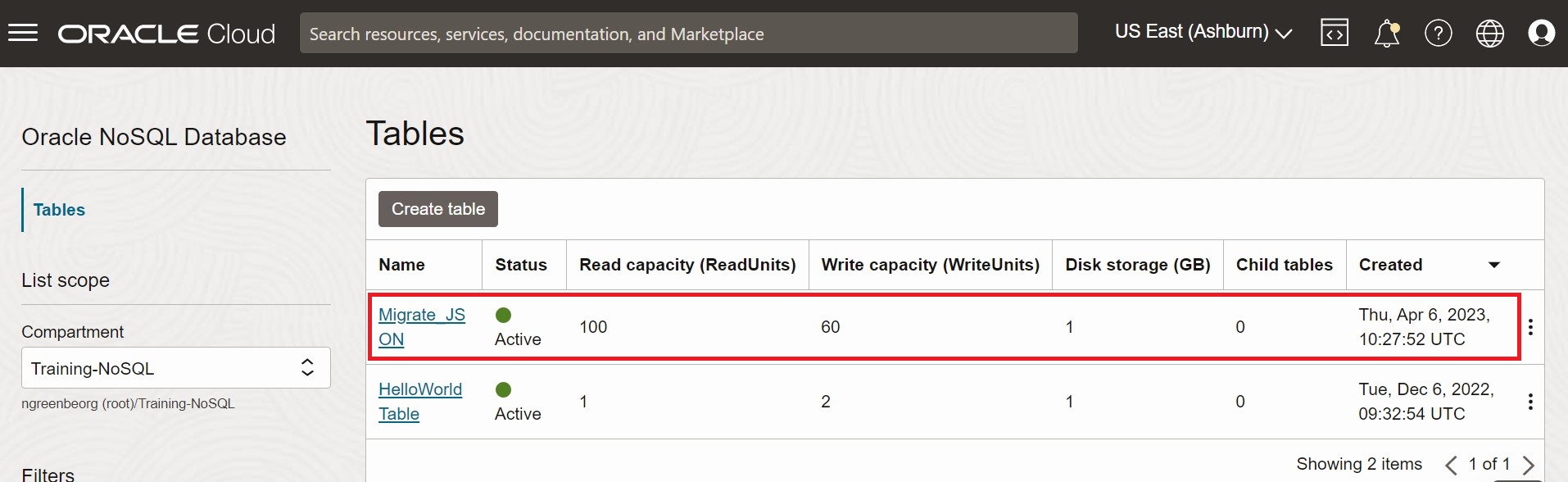

Figure - Oracle NoSQL Database Cloud Service Console Tables

Description of "Figure - Oracle NoSQL Database Cloud Service Console Tables"

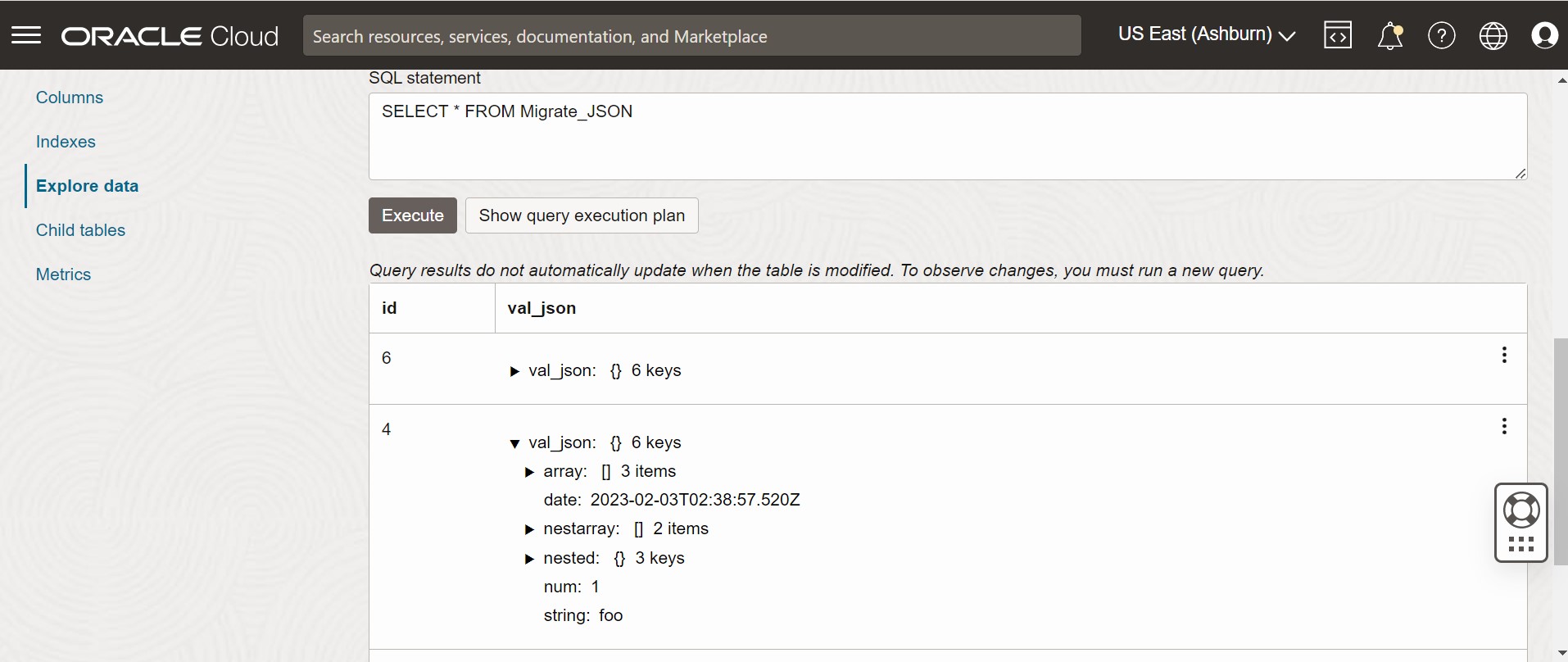

Figure - Oracle NoSQL Database Cloud Service Console Table Data

Description of "Figure - Oracle NoSQL Database Cloud Service Console Table Data"

This example shows how to use the Oracle NoSQL Database Migrator to copy Mongo-DB Formatted Data to the Oracle NoSQL Database Cloud Service (NDCS).

After evaluating multiple options, an organization finalizes Oracle NoSQL Database Cloud Service as its NoSQL Database platform. As its NoSQL tables and data are in MongoDB, they are looking for a way to migrate those tables and data to Oracle NDCS.

You can copy a file or directory containing the MongoDB exported JSON data for migration by specifying the file or directory in the source configuration template.

A sample MongoDB-formatted JSON File is as follows:MongoDB supports two types of extensions to the JSON format of files, Canonical mode and Relaxed mode . You can supply the MongoDB-formatted JSON file that is generated using the mongoexport tool in either Canonical or Relaxed mode. Both the modes are supported by the NoSQL Database Migrator for migration.

For more information on the MongoDB Extended JSON (v2) file, See mongoexport_formats.

For more information on the generation of MongoDB-formatted JSON file, See mongoexport.

For the demonstration, let us look at how to migrate a MongoDB-formatted JSON file to NDCS. We will use a manually created configuration file for this example.

CREATE TABLE IF NOT EXISTS (ID STRING, DOCUMENT JSON,PRIMARY KEY(SHARD(ID)) [DEFAULT] tenancy=ocid1.tenancy.oc1. user=ocid1.user.oc1. fingerprint= 43:d1. key_file= pass_phrase=

To migrate the MongoDB-formatted JSON data to the Oracle NoSQL Database Cloud Service :

< "source" : < "type" : "file", "format" : "mongodb_json", "dataPath" : "" >, "sink" : < "type" : "nosqldb_cloud", "endpoint" : "us-phoenix-1", "table" : "mongoImport", "compartment" : "developers", "schemaInfo" : < "defaultSchema" : true, "readUnits" : 100, "writeUnits" : 60, "storageSize" : 1 >, "credentials" : "", "credentialsProfile" : "DEFAULT", "writeUnitsPercent" : 90, "requestTimeoutMs" : 5000 >, "abortOnError" : true, "migratorVersion" : "1.0.0" >[~/nosqlMigrator/nosql-migrator-1.0.0]$./runMigrator --config Records provided by source=29,353, Records written to sink=29,353, Records failed=0. Elapsed time: 9min 9sec 630ms Migration completed.To validate the migration, you can login to your NDCS console and verify that myTable is created with the source data.

This example shows how to use the Oracle NoSQL Database Migrator to copy DynamoDB JSON file to Oracle NoSQL Database.

After evaluating multiple options, an organization finalizes Oracle NoSQL Database over DynamoDB database. The organization wants to migrate their tables and data from DynamoDB to Oracle NoSQL Database (On-premises).

You can migrate a file or directory containing the DynamoDB exported JSON data from a file system by specifying the path in the source configuration template.

A sample DynamoDB-formatted JSON File is as follows:,"Phones":<"L":[<"L":[<"S":"555-222">,]>]>,"PremierCustomer":,"Address":,"Street":,"DoorNum":,"City":>>,"FirstName":,"FavNumbers":,"LastName":,"FavColors":,"Age":>> ,"Phones":<"L":[<"L":[<"S":"222-222">]>]>,"PremierCustomer":,"Address":,"Street":,"DoorNum":,"City":>>,"FirstName":,"FavNumbers":,"LastName":,"FavColors":,"Age":>> You copy the exported DynamoDB table data from AWS S3 storage to a local mounted file system.

For this demonstration, you will learn how to migrate a DynamoDB JSON file to Oracle NoSQL Database(On-premises). You will use a manually created configuration file for this example.

/ 01639372501551-bb4dd8c3 |-- 01639372501551-bb4dd8c3 ==> exported data prefix |----data |------sxz3hjr3re2dzn2ymgd2gi4iku.json.gz ==>table data |----manifest-files.json |----manifest-files.md5 |----manifest-summary.json |----manifest-summary.md5 |----_starteddownload-dir/01639372501551-bb4dd8c3 |----data |------sxz3hjr3re2dzn2ymgd2gi4iku.json.gz ==>table data |----manifest-files.json |----manifest-files.md5 |----manifest-summary.json |----manifest-summary.md5 |----_startedHere the defaultSchema is TRUE and so the migrator creates the default schema at the sink. You need to specify the DDBPartitionKey and the corresponding NoSQL column type. Else an error is thrown.

< "source" : < "type" : "file", "format" : "dynamodb_json", "dataPath" : "" >, "sink" : < "type" : "nosqldb", "table" : "", "storeName" : "kvstore", "helperHosts" : [":5000"] "schemaInfo" : < "defaultSchema" : true, "DDBPartitionKey" : "", >, >, "abortOnError" : true, "migratorVersion" : "1.0.0" >CREATE TABLE IF NOT EXISTS (DDBPartitionKey_name DDBPartitionKey_type, [DDBSortKey_name DDBSortKey_type], DOCUMENT JSON, PRIMARY KEY(SHARD(DDBPartitionKey_name),[DDBSortKey_name]))Here the defaultSchema is FALSE and you specify the schemaPath as a file containing your DDL statement. See Mapping of DynamoDB types to Oracle NoSQL types for more details.

Note: If the Dynamo DB table has a data type that is not supported in NoSQL, the migration fails. A sample schema file is shown below.CREATE TABLE IF NOT EXISTS sampledynDBImp (AccountId INTEGER,document JSON, PRIMARY KEY(SHARD(AccountId)));The schema file is used to create the table at the sink as part of the migration. As long as the primary key data is provided, the input JSON record will be inserted, otherwise it throws an error.

Note: If the input data does not contain a value for a particular column(other than the primary key) then the column default value will be used. The default value should be part of the column definition while creating the table. For example id INTEGER not null default 0 . If the column does not have a default definition then SQL NULL is inserted if no values are provided for the column.

< "source" : < "type" : "file", "format" : "dynamodb_json", "dataPath" : "" >, "sink" : < "type" : "nosqldb", "table" : "", "schemaInfo" : < "defaultSchema" : false, "readUnits" : 100, "writeUnits" : 60, "schemaPath" : "", "storageSize" : 1 >, "storeName" : "kvstore", "helperHosts" : [":5000"] >, "abortOnError" : true, "migratorVersion" : "1.0.0" >[~/nosqlMigrator/nosql-migrator-1.0.0]$./runMigrator --config Records provided by source=7. Records written to sink=7, Records failed=0, Records skipped=0. Elapsed time: 0 min 2sec 50ms Migration completed. java -jar lib/sql.jar -helper-hosts localhost:5000 -store kvstore

Verify that the new table is created with the source data:

desc SELECT * from

This example shows how to use the Oracle NoSQL Database Migrator to copy DynamoDB JSON file stored in an AWS S3 store to the Oracle NoSQL Database Cloud Service (NDCS).

After evaluating multiple options, an organization finalizes Oracle NoSQL Database Cloud Service over DynamoDB database. The organization wants to migrate their tables and data from DynamoDB to Oracle NoSQL Database Cloud Service.

You can migrate a file containing the DynamoDB exported JSON data from the AWS S3 storage by specifying the path in the source configuration template.

A sample DynamoDB-formatted JSON File is as follows:,"Phones":<"L":[<"L":[<"S":"555-222">,]>]>,"PremierCustomer":,"Address":,"Street":,"DoorNum":,"City":>>,"FirstName":,"FavNumbers":,"LastName":,"FavColors":,"Age":>> ,"Phones":<"L":[<"L":[<"S":"222-222">]>]>,"PremierCustomer":,"Address":,"Street":,"DoorNum":,"City":>>,"FirstName":,"FavNumbers":,"LastName":,"FavColors":,"Age":>> You export the DynamoDB table to AWS S3 storage as specified in Exporting DynamoDB table data to Amazon S3.

For this demonstration, you will learn how to migrate a DynamoDB JSON file in an AWS S3 source to NDCS. You will use a manually created configuration file for this example.

Refer to steps provided in Exporting DynamoDB table data to Amazon S3 to export your table. While exporting, you select the format as DynamoDB JSON . The exported data contains DynamoDB table data in multiple gzip files as shown below.

/ 01639372501551-bb4dd8c3 |-- 01639372501551-bb4dd8c3 ==> exported data prefix |----data |------sxz3hjr3re2dzn2ymgd2gi4iku.json.gz ==>table data |----manifest-files.json |----manifest-files.md5 |----manifest-summary.json |----manifest-summary.md5 |----_started[DEFAULT] tenancy=ocid1.tenancy.oc1. user=ocid1.user.oc1. fingerprint= 43:d1. key_file= pass_phrase=

Here the defaultSchema is TRUE and so the migrator creates the default schema at the sink. You need to specify the DDBPartitionKey and the corresponding NoSQL column type. Else an error is thrown.

< "source" : < "type" : "aws_s3", "format" : "dynamodb_json", "s3URL" : "./export_path>", "credentials" : "", "credentialsProfile" : >, "sink" : < "type" : "nosqldb_cloud", "endpoint" : "", "table" : "", "compartment" : "", "schemaInfo" : < "defaultSchema" : true, "readUnits" : 100, "writeUnits" : 60, "DDBPartitionKey" : "", "storageSize" : 1 >, "credentials" : "", "credentialsProfile" : "DEFAULT", "writeUnitsPercent" : 90, "requestTimeoutMs" : 5000 >, "abortOnError" : true, "migratorVersion" : "1.0.0" >CREATE TABLE IF NOT EXISTS (DDBPartitionKey_name DDBPartitionKey_type, [DDBSortKey_name DDBSortKey_type], DOCUMENT JSON, PRIMARY KEY(SHARD(DDBPartitionKey_name),[DDBSortKey_name]))Here the defaultSchema is FALSE and you specify the schemaPath as a file containing your DDL statement. See Mapping of DynamoDB types to Oracle NoSQL types for more details.

Note: If the Dynamo DB table has a data type that is not supported in NoSQL, the migration fails. A sample schema file is shown below.CREATE TABLE IF NOT EXISTS sampledynDBImp (AccountId INTEGER,document JSON, PRIMARY KEY(SHARD(AccountId)));The schema file is used to create the table at the sink as part of the migration. As long as the primary key data is provided, the input JSON record will be inserted, otherwise it throws an error.

Note: If the input data does not contain a value for a particular column(other than the primary key) then the column default value will be used. The default value should be part of the column definition while creating the table. For example id INTEGER not null default 0 . If the column does not have a default definition then SQL NULL is inserted if no values are provided for the column.

< "source" : < "type" : "aws_s3", "format" : "dynamodb_json", "s3URL" : "./export_path>", "credentials" : "", "credentialsProfile" : >, "sink" : < "type" : "nosqldb_cloud", "endpoint" : "", "table" : "", "compartment" : "", "schemaInfo" : < "defaultSchema" : false, "readUnits" : 100, "writeUnits" : 60, "schemaPath" : "", "storageSize" : 1 >, "credentials" : "", "credentialsProfile" : "DEFAULT", "writeUnitsPercent" : 90, "requestTimeoutMs" : 5000 >, "abortOnError" : true, "migratorVersion" : "1.0.0" >[~/nosqlMigrator/nosql-migrator-1.0.0]$./runMigrator --config Records provided by source=7. Records written to sink=7, Records failed=0, Records skipped=0. Elapsed time: 0 min 2sec 50ms Migration completed.You can login to your NDCS console and verify that the new table is created with the source data.

This example shows the usage of Oracle NoSQL Database Migrator to perform cross-region migration.

An organization uses Oracle NoSQL Database Cloud Service to store and manage its data. It decides to replicate data from an existing region to a newer region for testing purposes before the new region can be launched for the production environment.

In this use case, you will learn to use the NoSQL Database Migrator to copy data from the user_data table in the Ashburn region to the Phoenix region.

You run the runMigrator utility by passing a pre-created configuration file. If you don't provide the configuration file as a runtime parameter, the runMigrator utility prompts you to generate the configuration through an interactive procedure.

<"id":40,"firstName":"Joanna","lastName":"Smith","otherNames":[<"first":"Joanna","last":"Smart">],"age":null,"income":75000,"address":<"city":"Houston","number":401,"phones":[<"area":null,"kind":"work","number":1618955>,,],"state":"TX","street":"Tex Ave","zip":95085>,"connections":[70,30,40],"email":"joanna.smith123@reachmail.com","communityService":"**"> <"id":10,"firstName":"John","lastName":"Smith","otherNames":[<"first":"Johny","last":"Good">,,,],"age":22,"income":45000,"address":,,],"state":"CA","street":"Pacific Ave","zip":95008>,"connections":[30,55,43],"email":"john.smith@reachmail.com","communityService":"****"> <"id":20,"firstName":"Jane","lastName":"Smith","otherNames":[<"first":"Jane","last":"BeGood">],"age":22,"income":55000,"address":,,],"state":"CA","street":"Atlantic Ave","zip":95005>,"connections":[40,75,63],"email":"jane.smith201@reachmail.com","communityService":"*****"> <"id":30,"firstName":"Adam","lastName":"Smith","otherNames":[<"first":"Adam","last":"BeGood">],"age":45,"income":75000,"address":<"city":"Houston","number":301,"phones":[<"area":618,"kind":"work","number":6618955>,,],"state":"TX","street":"Indian Ave","zip":95075>,"connections":[60,45,73],"email":"adam.smith201reachmail.com","communityService":"***">Identify the sink table schema.

You can use the same table name and schema as the source table. For information on other schema options, see Identify the Source and Sink topic in Workflow for Oracle NoSQL Database Migrator

In this example, the regions are under different tenancies. The DEFAULT profile includes OCI credentials for the Ashburn region and DEFAULT2 includes OCI credentials for the Phoenix region.

In the migrator configuration file endpoint parameter (both source and sink configuration templates), you can provide either the service endpoint URL or the region ID of the regions. For the list of data regions supported for Oracle NoSQL Database Cloud Service and their service endpoint URLs, see Data Regions and Associated Service URLs in the Oracle NoSQL Database Cloud Service document.

[DEFAULT] user=ocid1.user.oc1. fingerprint=fd:96. tenancy=ocid1.tenancy.oc1. region=us-ashburn-1 key_file= pass_phrase=abcd [DEFAULT2] user=ocid1.user.oc1. fingerprint=1b:68. tenancy=ocid1.tenancy.oc1. region=us-phoenix-1 key_file= pass_phrase=23456< "source" : < "type" : "nosqldb_cloud", "endpoint" : "us-ashburn-1", "table" : "user_data", "compartment" : "ocid1.compartment.oc1..aaaaaaaahcrgrgptoaq4cgpoymd32ti2ql4sdpu5puroausdf4og55z4tnya", "credentials" : "/home//.oci/config", "credentialsProfile" : "DEFAULT", "readUnitsPercent" : 100, "includeTTL" : false, "requestTimeoutMs" : 5000 >, "sink" : < "type" : "nosqldb_cloud", "endpoint" : "us-phoenix-1", "table" : "user_data", "compartment" : "ocid1.compartment.oc1..aaaaaaaaleiwplazhwmicoogv3tf4lum4m4nzbcv5wfjmoxuz3doreagvdma", "includeTTL" : false, "schemaInfo" : < "readUnits" : 100, "writeUnits" : 60, "storageSize" : 1, "useSourceSchema" : true >, "credentials" : "/home//.oci/config", "credentialsProfile" : "DEFAULT2", "writeUnitsPercent" : 90, "overwrite" : true, "requestTimeoutMs" : 5000 >, "abortOnError" : true, "migratorVersion" : "1.5.0" >[~/nosql-migrator-1.5.0]$./runMigrator --config creating source from given configuration: source creation completed creating sink from given configuration: sink creation completed creating migrator pipeline migration started [cloud sink] : start loading DDLs [cloud sink] : executing DDL: CREATE TABLE IF NOT EXISTS user_data (id INTEGER, firstName STRING, lastName STRING, otherNames ARRAY(RECORD(first STRING, last STRING)), age INTEGER, income INTEGER, address JSON, connections ARRAY(INTEGER), email STRING, communityService STRING, PRIMARY KEY(SHARD(id))),limits: [100, 60, 1] [cloud sink] : completed loading DDLs [cloud sink] : start loading records migration completed. Records provided by source=5, Records written to sink=5, Records failed=0, Records skipped=0. Elapsed time: 0min 5sec 603ms Migration completed.To validate the migration, you can log in to your Oracle NoSQL Database Cloud Service console in the Phoenix region. Verify that the source data from the user_data table in the Ashburn region is copied to the user_data table in this region. For the procedure to access the console, see Accessing the Service from the Infrastructure Console article.

This example shows the usage of Oracle NoSQL Database Migrator from a Cloud Shell.

A start-up venture plans to use Oracle NoSQL Database Cloud Service as its data storage solution. The company wants to use Oracle NoSQL Database Migrator to copy data from a table in the Oracle NoSQL Database Cloud Service to OCI Object Storage to make periodic backups of their data. As a cost-effective measure, they want to run the NoSQL Database Migrator utility from the Cloud Shell, which is accessible to all the OCI users.

In this use case, you will learn to copy the NoSQL Database Migrator utility to a Cloud Shell in the subscribed region and perform a data migration. You migrate the source data from Oracle NoSQL Database Cloud Service table to a JSON file in the OCI Object Storage.

You run the runMigrator utility by passing a pre-created configuration file. If you don't provide the configuration file as a runtime parameter, the runMigrator utility prompts you to generate the configuration through an interactive procedure.

If the Object Storage Bucket is in a different compartment, ensure that you have the privileges to write objects in the bucket. For more details on setting the policies, see Let users write objects to Object Storage buckets.

To back up the NDCSupload table from Oracle NoSQL Database Cloud Service to a JSON file in the OCI Object Storage Bucket using the Cloud Shell, perform the following:

< "source" : < "type" : "nosqldb_cloud", "endpoint" : "us-ashburn-1", "table" : "NDCSupload", "compartment" : "ocid1.compartment.oc1..aaaaaaaahcrgrgptoaq4cgpoymd32ti2ql4sdpu5puroausdf4og55z4tnya", "useDelegationToken" : true, "readUnitsPercent" : 90, "includeTTL" : true, "requestTimeoutMs" : 5000 >, "sink" : < "type" : "object_storage_oci", "format" : "json", "endpoint" : "us-ashburn-1", "namespace" : "", "bucket" : "Migrate_oci", "prefix" : "Delegation", "chunkSize" : 32, "compression" : "", "useDelegationToken" : true >, "abortOnError" : true, "migratorVersion" : "1.6.0" >[~/nosql-migrator-1.6.0]$./runMigrator --config [INFO] creating source from given configuration: [INFO] source creation completed [INFO] creating sink from given configuration: [INFO] sink creation completed [INFO] creating migrator pipeline [INFO] migration started [INFO] [OCI OS sink] : writing table schema to Delegation/Schema/schema.ddl [INFO] [OCI OS sink] : start writing records with prefix Delegation [INFO] migration completed. Records provided by source=4,Records written to sink=4,Records failed=0. Elapsed time: 0min 0sec 486ms Migration completed.Note: Data is copied to the file: Migrate_oci/NDCSupload/Delegation/Data/000000.json Depending on the chunkSize parameter in the sink configuration template, the source data can be split into several JSON files in the same directory. The schema is copied to the file: Migrate_oci/NDCStable1/Delegation/Schema/schema.ddl

ValidationTo validate your data backup, log in to the Oracle NoSQL Database Cloud Service console. Navigate through the menus, Storage > Object Storage & Archive Storage > Buckets . Access the files from the NDCSupload/Delegation directory in the Migrate_oci bucket. For the procedure to access the console, see Accessing the Service from the Infrastructure Console article.

This example shows the usage of Oracle NoSQL Database Migrator to copy data from a CSV file to Oracle NoSQL Database .

After evaluating multiple options, an organization finalizes Oracle NoSQL Database as its NoSQL Database platform. As its source contents are in CSV file format, they are looking for a way to migrate them to Oracle NoSQL Database .

In this example, you will learn to migrate the data from a CSV file called course.csv , which contains information about various courses offered by a university. You generate the configuration file from the runMigrator utility.

You can also prepare the configuration file with the identified source and sink details. See Oracle NoSQL Database Migrator Reference.

cat [~/nosql-migrator-1.5.0]/course.csv 1,"Computer Science", "San Francisco", "2500" 2,"Bio-Technology", "Los Angeles", "1200" 3,"Journalism", "Las Vegas", "1500" 4,"Telecommunication", "San Francisco", "2500"

cat [~/nosql-migrator-1.5.0]/mytable_schema.ddl create table course (id INTEGER, name STRING, location STRING, fees INTEGER, PRIMARY KEY(id));

To migrate the CSV file data from course.csv to Oracle NoSQL Database Service, perform the following steps:

[~/nosql-migrator-1.5.0]$./runMigratorYou can choose a location for the configuration file or retain the default location by pressing the Enter key .

Configuration file is not provided. Do you want to generate configuration? (y/n) [n]: y Generating a configuration file interactively. Enter a location for your config [./migrator-config.json]: ./migrator-config.json already exist. Do you want to overwrite?(y/n) [n]: y

Select the source: 1) nosqldb 2) nosqldb_cloud 3) file 4) object_storage_oci 5) aws_s3 #? 3 Configuration for source type=file Select the source file format: 1) json 2) mongodb_json 3) dynamodb_json 4) csv #? 4

Enter path to a file or directory containing csv data: [~/nosql-migrator-1.5.0]/course.csv Does the CSV file contain a headerLine? (y/n) [n]: n Do you want to reorder the column names of NoSQL table with respect to CSV file columns? (y/n) [n]: n Provide the CSV file encoding. The supported encodings are: UTF-8,UTF-16,US-ASCII,ISO-8859-1. [UTF-8]: Do you want to trim the tailing spaces? (y/n) [n]: n

Select the sink: 1) nosqldb 2) nosqldb_cloud #? 1 Configuration for sink type=nosqldb Enter store name of the Oracle NoSQL Database: mystore Enter comma separated list of host:port of Oracle NoSQL Database: :5000

Enter fully qualified table name: course

Include TTL data? If you select 'yes' TTL value provided by the source will be set on imported rows. (y/n) [n]: n

Would you like to create table as part of migration process? Use this option if you want to create table through the migration tool. If you select yes, you will be asked to provide a file that contains table DDL or to use schema provided by the source or default schema. (y/n) [n]: y Enter path to a file containing table DDL: [~/nosql-migrator-1.5.0]/mytable_schema.ddl Is the store secured? (y/n) [y]: n would you like to overwrite records which are already present? If you select 'no' records with same primary key will be skipped [y/n] [y]: y Enter store operation timeout in milliseconds. [5000]: Would you like to add transformations to source data? (y/n) [n]: n

Would you like to continue migration if any data fails to be migrated? (y/n) [n]: n

Generated configuration is: < "source" : < "type" : "file", "format" : "csv", "dataPath" : "[~/nosql-migrator-1.5.0]/course.csv", "hasHeader" : false, "csvOptions" : < "encoding" : "UTF-8", "trim" : false >>, "sink" : < "type" : "nosqldb", "storeName" : "mystore", "helperHosts" : [":5000"], "table" : "migrated_table", "query" : "", "includeTTL" : false, "schemaInfo" : < "schemaPath" : "[~/nosql-migrator-1.5.0]/mytable_schema.ddl" >, "overwrite" : true, "requestTimeoutMs" : 5000 >, "abortOnError" : true, "migratorVersion" : "1.5.0" >

Note : If you select n , you can use the generated configuration file to perform the migration. Specify the ./runMigrator -c or the ./runMigrator --config option.

Would you like to run the migration with above configuration? If you select no, you can use the generated configuration file to run the migration using: ./runMigrator --config ./migrator-config.json (y/n) [y]: y

creating source from given configuration: source creation completed creating sink from given configuration: sink creation completed creating migrator pipeline migration started [nosqldb sink] : start loading DDLs [nosqldb sink] : executing DDL: create table course (id INTEGER, name STRING, location STRING, fees INTEGER, PRIMARY KEY(id)) [nosqldb sink] : completed loading DDLs [nosqldb sink] : start loading records [csv file source] : start parsing CSV records from file: course.csv migration completed. Records provided by source=4, Records written to sink=4, Records failed=0,Records skipped=0. Elapsed time: 0min 0sec 559ms Migration completed.

Validation

Start the SQL prompt in your KVStore.

java -jar lib/sql.jar -helper-hosts localhost:5000 -store kvstore

Verify that the new table is created with the source data:

sql-> select * from course; 4 rows returned